Lines 34 and 35 then loop over the training examples, yielding subsets of both X and y as mini-batches.

Next, we can parse our command line arguments:

    import argparse

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-e", "--epochs", type=float, default=100)
    ap.add_argument("-a", "--alpha", type=float, default=0.01)
    ap.add_argument("-b", "--batch-size", type=int, default=32)
    args = ap.parse_args()

We have already reviewed both the --epochs (number of epochs) and --alpha (learning rate) switches from the vanilla gradient descent example, but notice that we are introducing a third switch: --batch-size, which, as the name indicates, is the size of each of our mini-batches. We’ll default this value to 32 data points per mini-batch.
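To make the mini-batch loop concrete, here is a minimal sketch of a generator that yields subsets of both X and y, with its batch size taken from the --batch-size switch. The function name `next_batch`, the toy data, and the empty argument list passed to `parse_args` are assumptions made for illustration; this is not necessarily the code the line numbers above refer to.

```python
import argparse

# parse only the batch-size switch; the empty list means "use the defaults"
# (assumption: in a real script you would call ap.parse_args() with no arguments)
ap = argparse.ArgumentParser()
ap.add_argument("-b", "--batch-size", type=int, default=32)
args = ap.parse_args([])


def next_batch(X, y, batch_size):
    """Yield successive (features, labels) mini-batches of at most batch_size rows."""
    for i in range(0, len(X), batch_size):
        yield X[i:i + batch_size], y[i:i + batch_size]


# hypothetical toy data: 70 samples with two features each and a binary label
X = [[float(i), float(i) * 2.0] for i in range(70)]
y = [i % 2 for i in range(70)]

batches = list(next_batch(X, y, args.batch_size))
sizes = [len(batch_X) for batch_X, _ in batches]
print(sizes)  # [32, 32, 6]
```

Note that the last mini-batch is smaller whenever the number of samples is not a multiple of the batch size. The same slicing works unchanged if X and y are NumPy arrays.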

#Batch gradient descent update#

In practice, we often use mini-batches that are larger than 1. Typical batch sizes include 32, 64, 128, and 256.

So, why bother using batch sizes > 1? To start, batch sizes > 1 help reduce variance in the parameter update, leading to more stable convergence.
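The variance claim can be checked with a small simulation. The sketch below assumes a made-up pool of noisy per-example "gradients" (Gaussian values around a mean of 1.0) and measures how much the mini-batch average fluctuates from batch to batch for several batch sizes. The helper name `batch_gradient_variance` and all numbers are illustrative, not taken from any real model.

```python
import random
import statistics

random.seed(42)

# hypothetical per-example gradients: noisy values scattered around a mean of 1.0
per_example_grads = [random.gauss(1.0, 2.0) for _ in range(10_000)]


def batch_gradient_variance(grads, batch_size, trials=500):
    """Variance of the mini-batch mean across many randomly drawn batches."""
    estimates = [
        statistics.fmean(random.sample(grads, batch_size))
        for _ in range(trials)
    ]
    return statistics.variance(estimates)


variances = {b: batch_gradient_variance(per_example_grads, b) for b in (1, 32, 256)}
for b, v in variances.items():
    print(f"batch size {b:>3}: variance of gradient estimate = {v:.4f}")
```

Averaging over more samples gives a less noisy estimate of the gradient, so the printed variance should shrink as the batch size grows.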

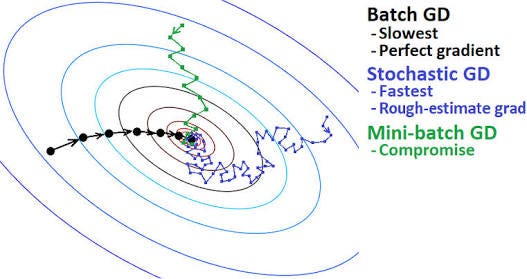

Reviewing the vanilla gradient descent algorithm, it should be (somewhat) obvious that the method will run very slowly on large datasets. The reason for this slowness is that each iteration of gradient descent requires us to compute a prediction for every training point in our training data before we are allowed to update our weight matrix. For image datasets such as ImageNet, where we have over 1.2 million training images, this computation can take a long time.

It also turns out that computing predictions for every training point before taking a step along our weight matrix is computationally wasteful and does little to help our model converge. Instead, what we should do is batch our updates. We can update the pseudocode to transform vanilla gradient descent into SGD by adding an extra function call:

    while True:
        batch = next_training_batch(data, batch_size)
        Wgradient = evaluate_gradient(loss, batch, W)
        W += -alpha * Wgradient

The only difference between vanilla gradient descent and SGD is the addition of the next_training_batch function. Instead of computing our gradient over the entire data set, we sample our data, yielding a batch. We evaluate the gradient on the batch and update our weight matrix W. From an implementation perspective, we also try to randomize our training samples before applying SGD, since the algorithm is sensitive to batches.

After looking at the pseudocode for SGD, you’ll immediately notice the introduction of a new parameter: the batch size. In a “purist” implementation of SGD, your mini-batch size would be 1, implying that we would randomly sample one data point from the training set, compute the gradient, and update our parameters.
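As a runnable illustration of the SGD loop described above, here is a minimal sketch that fits a single weight W to toy 1-D regression data. Several things are assumptions for the example only: the data (generated from y = 3x plus noise), the squared-error loss hard-coded into `evaluate_gradient` (so it takes no `loss` argument), the helper `mse`, the bounded loop in place of `while True`, and the chosen values of `alpha` and `batch_size`.

```python
import random

random.seed(0)

# toy training set generated from y = 3x + small Gaussian noise (illustrative only)
data = [(x, 3.0 * x + random.gauss(0.0, 0.1))
        for x in (random.uniform(-1.0, 1.0) for _ in range(1000))]


def next_training_batch(data, batch_size):
    """Randomly sample batch_size points from the training data."""
    return random.sample(data, batch_size)


def evaluate_gradient(batch, W):
    """Gradient of the mean squared error (W*x - y)^2 over the batch, w.r.t. W."""
    return sum(2.0 * (W * x - y) * x for x, y in batch) / len(batch)


def mse(data, W):
    """Mean squared error of the model y = W*x over the full data set."""
    return sum((W * x - y) ** 2 for x, y in data) / len(data)


W = 0.0
alpha = 0.1        # learning rate
batch_size = 32
initial_loss = mse(data, W)

for step in range(500):  # bounded number of updates instead of `while True`
    batch = next_training_batch(data, batch_size)
    Wgradient = evaluate_gradient(batch, W)
    W += -alpha * Wgradient

final_loss = mse(data, W)
print(f"W = {W:.3f}, loss {initial_loss:.4f} -> {final_loss:.4f}")
```

Each update looks at only 32 of the 1,000 points, yet W should still settle close to the slope of 3 used to generate the data. Setting `batch_size = 1` gives the "purist" variant, with noisier individual steps.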

#Batch gradient descent code#

Mini-batch SGD
